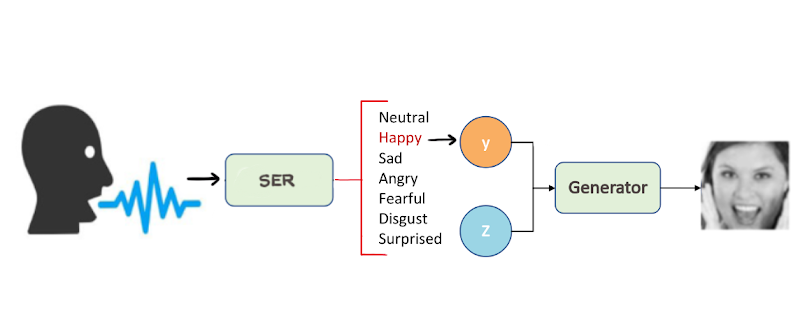

The aim of this project is to combine two standalone deep learning techniques, Speech Emotion Recognition (SER) and a Conditional GAN (cGAN), into an end-to-end system that generates human faces with the cGAN, conditioned on the emotion that SER identifies in human speech. The project pipeline can be summarized as follows:

The project demo is shown below:

Dataset:

For SER, we used the RAVDESS dataset, which contains 1440 speech files and 1012 song files. It includes recordings of 24 professional actors (12 female, 12 male) vocalizing two lexically matched statements in a neutral North American accent. The speech covers 8 emotions, represented by integers as follows: 01 = neutral, 02 = calm, 03 = happy, 04 = sad, 05 = angry, 06 = fearful, 07 = disgust, 08 = surprised. Every emotion is recorded by actors of both genders. Since we are interested only in the speech data, we dropped the song data. We made the audio files gender-agnostic by merging the files of both genders.

For cGAN, we used the dataset hosted on Kaggle as part of the Facial Expression Recognition 2013 (FER-2013) Challenge. It is available for download[1]. It consists of 35,887 examples, each a 48x48 pixel grayscale image of a face. Each image is tagged with the emotion it represents, encoded as an integer: 0=Angry, 1=Disgust, 2=Fear, 3=Happy, 4=Sad, 5=Surprise, 6=Neutral. These emotions were used as conditions while generating human faces.

Since the emotion predicted by SER is fed to the cGAN to generate faces conditioned on that emotion, we had to map the emotions in the RAVDESS dataset to the ones in the FER-2013 dataset. We therefore dropped the RAVDESS audio files that correspond to the “calm” emotion, since this emotion is absent from the FER-2013 dataset.
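The mapping described above can be sketched as a small lookup table. The integer codes come from the two label lists given earlier; the names `RAVDESS_TO_FER` and `remap_labels` are hypothetical and only illustrate the idea, not necessarily how the project implemented it.

```python
# RAVDESS emotion code -> FER-2013 label, built from the two label lists above.
# Code 2 ("calm") is deliberately missing because FER-2013 has no such class.
RAVDESS_TO_FER = {
    1: 6,  # neutral   -> Neutral
    3: 3,  # happy     -> Happy
    4: 4,  # sad       -> Sad
    5: 0,  # angry     -> Angry
    6: 2,  # fearful   -> Fear
    7: 1,  # disgust   -> Disgust
    8: 5,  # surprised -> Surprise
}


def remap_labels(samples):
    """Convert (item, ravdess_code) pairs to (item, fer_label) pairs.

    Samples whose emotion has no FER-2013 counterpart ("calm") are dropped.
    """
    return [
        (item, RAVDESS_TO_FER[code])
        for item, code in samples
        if code in RAVDESS_TO_FER
    ]
```

For example, `remap_labels([("a.wav", 5), ("b.wav", 2)])` keeps only the angry clip and relabels it as 0.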

Modelling:

The end-to-end pipeline solves the two subproblems, SER and conditional image generation, separately, so we trained a separate model for each. During the prediction step, the output of SER is fed to the cGAN to generate images for the predicted emotion.
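The prediction step can be sketched roughly as follows. This is only an illustration under several assumptions: `ser_predict` and `generator_predict` are hypothetical callables standing in for the two trained models, the SER model is assumed to output probabilities over the seven FER-2013 labels in FER-2013 order, and the latent size of 100 is an arbitrary placeholder.

```python
import numpy as np

FER_LABELS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def speech_to_face(features, ser_predict, generator_predict,
                   latent_dim=100, rng=None):
    """Chain the two models: audio features -> emotion label -> face image.

    ser_predict and generator_predict are placeholders (assumptions) for the
    trained SER classifier and the trained cGAN generator.
    """
    if rng is None:
        rng = np.random.default_rng()

    # SER step: class probabilities for a batch containing one utterance.
    probs = ser_predict(features[np.newaxis, ...])
    label = int(np.argmax(probs, axis=-1)[0])

    # cGAN step: a random latent vector plus the predicted label as condition.
    z = rng.standard_normal((1, latent_dim))
    image = generator_predict(z, np.array([label]))

    return FER_LABELS[label], image[0]
```

Keeping the two models behind plain callables reflects the point made above: each model is trained on its own, and only the predicted label crosses the boundary between them.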

SER Model:

To process the audio files and extract features from them, we used the librosa library in Python. As features, we used MFCCs (Mel Frequency Cepstral Coefficients), a widely used feature set for speech and audio analysis. Using MFCCs, each audio file was converted to a 259-dimensional vector. The integer labels were converted to one-hot encoding. The best performing architecture is shown below:

Since this is a classification task on data with temporal structure, we used a 1D Convolutional Neural Network.
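A minimal sketch of the feature extraction and label encoding described above is given here. The post does not state the loading parameters, so the values below are assumptions: a 3-second clip at 44.1 kHz with librosa's default hop of 512 samples yields 259 frames, and averaging the MFCCs over the coefficient axis then gives one 259-dimensional vector per file. The helper names are hypothetical.

```python
import numpy as np

# Assumed settings (not given in the post), chosen because they produce
# exactly 259 frames per clip.
SAMPLE_RATE = 44100
DURATION = 3.0
HOP_LENGTH = 512


def expected_frames(duration=DURATION, sr=SAMPLE_RATE, hop_length=HOP_LENGTH):
    """Number of frames librosa produces with centered framing."""
    return 1 + int(duration * sr) // hop_length


def pad_or_trim(y, n_samples):
    """Force a waveform to exactly n_samples by trimming or zero-padding."""
    y = np.asarray(y, dtype=np.float32)[:n_samples]
    return np.pad(y, (0, n_samples - len(y)))


def mfcc_vector(path, n_mfcc=13):
    """Load one audio file and return a fixed-length MFCC feature vector."""
    import librosa  # imported lazily so the helpers above work without it

    y, sr = librosa.load(path, sr=SAMPLE_RATE, duration=DURATION)
    y = pad_or_trim(y, int(DURATION * SAMPLE_RATE))
    mfcc = librosa.feature.mfcc(y=y, sr=sr, n_mfcc=n_mfcc,
                                hop_length=HOP_LENGTH)
    # Average over the coefficient axis: one value per time frame.
    return mfcc.mean(axis=0)


def one_hot(labels, num_classes=7):
    """Convert integer labels to one-hot rows."""
    return np.eye(num_classes, dtype=np.float32)[np.asarray(labels)]
```

Padding every clip to the same length before computing MFCCs keeps the feature vector size fixed, which a 1D convolutional network with a dense classifier head needs.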

cGAN Model:

Like a standard GAN, the conditional architecture consists of a discriminator and a generator. The one difference is that both models are conditioned on a label or variable. The combined GAN network maps latent space vectors to the discriminator’s assessment of the realism of these latent vectors as decoded by the generator.
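For reference, the conditioning can be expressed with the standard cGAN objective from the original cGAN formulation. The post does not state the exact loss used in this project, so this is the textbook form rather than a description of the implementation. Here x is a real face image, y is the emotion label, and z is a latent vector.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)} \left[ \log D(x \mid y) \right]
  + \mathbb{E}_{z \sim p_z(z)} \left[ \log \left( 1 - D(G(z \mid y)) \right) \right]
```

The label y appears in both terms, which is what makes the generator produce a face for a requested emotion and lets the discriminator judge whether an image is realistic for that emotion.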

Result:

The maximum accuracy achieved by the SER model is 83.4% on the training data and 45.8% on the test data, obtained with the architecture consisting of six 1D Conv layers.

The cGAN with four convolution layers in the discriminator gave the highest accuracy of the three experiments. The generated images corresponding to each of the emotions are shown below.
